Introduction

This article shows how to use a Python web crawler to build a poem-chain game (诗歌接龙).

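The project works in three steps:

Crawl poems with a web crawler and build a poem corpus;

Split the poems into lines and build a dictionary in which each key is the pinyin of a line's first character and each value is the list of lines that start with that pinyin, then save the dictionary as a pickle file;

Load the pickle file, write the game program, and run it as an exe file.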
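The second step can be sketched as follows. This is only a minimal illustration under stated assumptions, not the article's program: `TOY_PINYIN` is a hypothetical hand-written table standing in for a real pinyin conversion library, `split_lines` and `build_index` are names invented here, and splitting at commas as well as full stops is an assumption about what counts as a line.

```python
import pickle
import re
from collections import defaultdict

# Hypothetical stand-in for a pinyin library: maps a character to its
# pinyin with the tone as a trailing digit. A real project would need
# coverage for every character in the corpus.
TOY_PINYIN = {"床": "chuang2", "疑": "yi2", "举": "ju3", "低": "di1"}


def split_lines(poem):
    """Split a poem into lines, keeping each line's closing punctuation."""
    return re.findall(r"[^，。？！]+[，。？！]", poem)


def build_index(poems):
    """Group lines by the pinyin of their first character."""
    index = defaultdict(list)
    for poem in poems:
        for line in split_lines(poem):
            key = TOY_PINYIN.get(line[0])
            if key is not None:  # skip characters missing from the toy table
                index[key].append(line)
    return dict(index)


poems = ["床前明月光，疑是地上霜。举头望明月，低头思故乡。"]
index = build_index(poems)

# Round-trip through pickle in memory; the article stores it in a file instead.
restored = pickle.loads(pickle.dumps(index))
```

Keying the dictionary by pinyin rather than by character means a lookup during the game is a single dictionary access, whichever character the previous line ended on.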

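The rule of the poem chain implemented here is that the pinyin of the first character of the next line, tone included, must match the pinyin of the last character of the previous line. The sections below walk through the implementation step by step.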
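The matching rule can be expressed as a small predicate. The sketch below assumes a hypothetical `TOY_PINYIN` table with the tone written as a trailing digit, and assumes that closing punctuation is ignored when looking up the last character; the helper names are invented for illustration.

```python
# Hypothetical toy table; the trailing digit is the tone.
TOY_PINYIN = {"乡": "xiang1", "香": "xiang1", "想": "xiang3"}
PUNCTUATION = "，。？！"


def first_pinyin(line):
    """Pinyin of the first character, or None if the table lacks it."""
    return TOY_PINYIN.get(line[0]) if line else None


def last_pinyin(line):
    """Pinyin of the last character, ignoring closing punctuation."""
    core = line.rstrip(PUNCTUATION)
    return TOY_PINYIN.get(core[-1]) if core else None


def can_follow(prev_line, next_line):
    """True when next_line may legally follow prev_line in the chain."""
    tail = last_pinyin(prev_line)
    return tail is not None and tail == first_pinyin(next_line)


same_tone = can_follow("低头思故乡。", "香雾云鬟湿，")       # xiang1 vs xiang1
other_tone = can_follow("低头思故乡。", "想得家中夜深坐，")  # xiang1 vs xiang3
```

Because the tone is part of the compared string, 香 (xiang1) is accepted after 乡 (xiang1) while 想 (xiang3) is rejected.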

Poem corpus

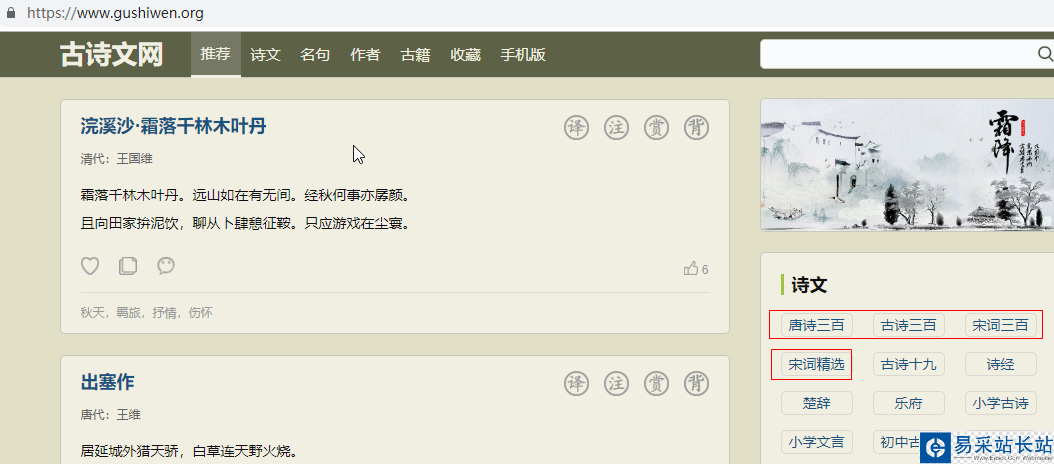

首先,我们利用Python爬虫来爬取诗歌,制作语料库。爬取的网址为:https://www.gushiwen.org,页面如下:

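Because this article mainly aims to demonstrate the approach, only four collections on that page were crawled: Three Hundred Tang Poems (唐诗三百首), Three Hundred Ancient Poems (古诗三百), Three Hundred Song Ci (宋词三百), and Selected Song Ci (宋词精选), roughly 1,100 poems in total. To speed up the crawl, the poem pages are fetched concurrently and the results are saved to poem.txt. The complete Python program is as follows: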

    import re
    import requests
    from bs4 import BeautifulSoup
    from concurrent.futures import ThreadPoolExecutor, wait, ALL_COMPLETED

    # Index pages of the poem collections to crawl
    urls = ['https://so.gushiwen.org/gushi/tangshi.aspx',
            'https://so.gushiwen.org/gushi/sanbai.aspx',
            'https://so.gushiwen.org/gushi/songsan.aspx',
            'https://so.gushiwen.org/gushi/songci.aspx'
            ]

    # Request headers, shared by every request
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.87 Safari/537.36'}

    # Collect the URL of every individual poem page
    poem_links = []
    for url in urls:
        req = requests.get(url, headers=headers)
        soup = BeautifulSoup(req.text, "lxml")
        content = soup.find_all('div', class_="sons")[0]
        links = content.find_all('a')
        for link in links:
            poem_links.append('https://so.gushiwen.org' + link['href'])

    poem_list = []

    # Fetch one poem page and clean up its text
    def get_poem(url):
        req = requests.get(url, headers=headers)
        soup = BeautifulSoup(req.text, "lxml")
        poem = soup.find('div', class_='contson').text.strip()
        poem = poem.replace(' ', '')
        # Remove parenthetical notes in half-width and full-width brackets
        poem = re.sub(r"\([\s\S]*?\)", '', poem)
        poem = re.sub(r"（[\s\S]*?）", '', poem)
        poem = re.sub(r"。\([\s\S]*?）", '', poem)
        # Normalize half-width ! and ? to their full-width forms
        poem = poem.replace('!', '！').replace('?', '？')
        poem_list.append(poem)

    # Crawl concurrently; max_workers is the number of threads and can be tuned
    executor = ThreadPoolExecutor(max_workers=10)
    # submit() takes the function first, then any arguments to pass to it
    future_tasks = [executor.submit(get_poem, url) for url in poem_links]
    # Block until every thread has finished before continuing
    wait(future_tasks, return_when=ALL_COMPLETED)

    # Deduplicate, sort by length, and write the poems to a txt file
    poems = list(set(poem_list))
    poems = sorted(poems, key=lambda x: len(x))
    for poem in poems:
        poem = poem.replace('《', '').replace('》', '') \
                   .replace('：', '').replace('“', '')
        print(poem)
        with open('F://poem.txt', 'a') as f:
            f.write(poem)
            f.write('\n')
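The text clean-up inside `get_poem` can be tried in isolation, without touching the network. The sketch below is an illustration under assumptions: `clean_poem` and `NOTE_PATTERN` are names invented here, the bracketed note in the sample string is made up, and all whitespace is removed rather than only spaces.

```python
import re

# Parenthetical notes, in either half-width or full-width brackets.
NOTE_PATTERN = re.compile(r"\([\s\S]*?\)|（[\s\S]*?）")


def clean_poem(text):
    """Drop whitespace and bracketed notes, then normalize ! and ?."""
    text = re.sub(r"\s+", "", text)
    text = NOTE_PATTERN.sub("", text)
    return text.replace("!", "！").replace("?", "？")


# The note in brackets is a made-up placeholder, not text from the site.
sample = "床前明月光，疑是地上霜。\n(此处为注释)举头望明月，低头思故乡。"
cleaned = clean_poem(sample)
print(cleaned)
```

The lazy quantifier `*?` matters here: with a greedy `*`, two separate notes in one poem would be merged into a single match and the verse between them would be deleted.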
