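This article shares the complete code of a multithreaded web crawler written in C# (a Windows Forms app), for reference. The details follow.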

HTTP request helper class (`HttpRequestUtil`):

Features:

1. Fetch the HTML of a web page

2. Download images from the web
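The helper class is built on `HttpWebRequest`, which .NET 6 and later mark obsolete (warning SYSLIB0014) in favour of `HttpClient`. As a sketch only, not the author's code and written for .NET 5+, the same two features could be expressed with one shared `HttpClient`. `HttpClientUtil` and `LocalPathFor` are hypothetical names. Unlike the original, the file name is taken from the URL path, so a query string does not end up in it, and the page encoding comes from the response's `Content-Type` charset (falling back to UTF-8) instead of always being UTF-8.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical HttpClient-based counterpart of HttpRequestUtil (written for .NET 5+)
public static class HttpClientUtil
{
    // One shared instance for the whole app; creating a new HttpClient per request can exhaust sockets
    private static readonly HttpClient Client = CreateClient();

    private static HttpClient CreateClient()
    {
        var client = new HttpClient();
        // Same User-Agent string as the article's HttpRequestUtil
        client.DefaultRequestHeaders.TryAddWithoutValidation(
            "User-Agent", "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)");
        return client;
    }

    // Feature 1: fetch a page's HTML; the charset comes from Content-Type, falling back to UTF-8
    public static Task<string> GetPageHtmlAsync(string url) => Client.GetStringAsync(url);

    // Local file path for a URL: last segment of the URL path, query string excluded
    public static string LocalPathFor(string url, string directory) =>
        Path.Combine(directory, Path.GetFileName(new Uri(url).AbsolutePath));

    // Feature 2: download a file into `directory`, skipping files that already exist
    public static async Task DownloadFileAsync(string url, string directory)
    {
        Directory.CreateDirectory(directory); // no-op if the folder already exists
        string target = LocalPathFor(url, directory);
        if (File.Exists(target)) return;

        using (Stream body = await Client.GetStreamAsync(url))
        using (FileStream file = new FileStream(target, FileMode.Create))
        {
            await body.CopyToAsync(file);
        }
    }
}
```

In a form, a call such as `await HttpClientUtil.DownloadFileAsync(imgUrl, Path.Combine(Application.StartupPath, "download"));` would take the place of the blocking `HttpDownloadFile(imgUrl)`.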

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;
using System.Windows.Forms;

namespace Utils
{
    /// <summary>
    /// HTTP request helper class
    /// </summary>
    public class HttpRequestUtil
    {
        private const string UserAgent = "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0)";

        /// <summary>
        /// Fetches a page's HTML (assumes the page is UTF-8 encoded)
        /// </summary>
        public static string GetPageHtml(string url)
        {
            HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
            request.UserAgent = UserAgent;
            // The GET request is not actually sent until GetResponse() is called
            using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
            using (Stream responseStream = response.GetResponseStream())
            using (StreamReader sr = new StreamReader(responseStream, Encoding.UTF8))
            {
                return sr.ReadToEnd();
            }
        }

        /// <summary>
        /// Downloads a file over HTTP into the "download" folder next to the executable
        /// </summary>
        public static void HttpDownloadFile(string url)
        {
            // Use the last URL segment as the local file name
            string fileName = url.Substring(url.LastIndexOf('/') + 1);
            string path = Path.Combine(Application.StartupPath, "download");
            Directory.CreateDirectory(path); // no-op if the folder already exists
            string filePathName = Path.Combine(path, fileName);
            if (File.Exists(filePathName)) return; // already downloaded

            HttpWebRequest request = (HttpWebRequest)WebRequest.Create(url);
            request.UserAgent = UserAgent;
            request.Proxy = null; // connect directly, without a proxy
            // Dispose the response and both streams even if a read or write fails
            using (HttpWebResponse response = (HttpWebResponse)request.GetResponse())
            using (Stream responseStream = response.GetResponseStream())
            using (Stream stream = new FileStream(filePathName, FileMode.Create))
            {
                // Copy the response body to the local file in 1 KB chunks
                byte[] bArr = new byte[1024];
                int size;
                while ((size = responseStream.Read(bArr, 0, bArr.Length)) > 0)
                {
                    stream.Write(bArr, 0, size);
                }
            }
        }
    }
}
```

Multithreaded crawler code (`Form1`):

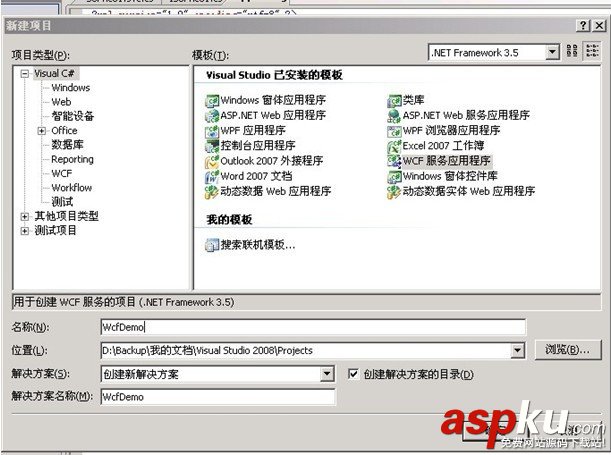

```csharp
using System;
using System.Text.RegularExpressions;
using System.Threading;
using System.Windows.Forms;
using Utils;

namespace 爬虫
{
    // Controls (button1-4, lblPage, lblPerson, lblNum, lblSpeed) live in Form1.Designer.cs, not shown here.
    // button1 = Start, button2 = Stop, button3 = Pause, button4 = Resume
    public partial class Form1 : Form
    {
        // Cooperative stop/pause signals. Thread.Abort/Suspend/Resume are obsolete
        // and not supported on .NET Core / .NET 5+.
        private CancellationTokenSource cts;
        private readonly ManualResetEventSlim pauseEvent = new ManualResetEventSlim(true); // set = running

        // Built once and shared; Regex matching is thread-safe
        private static readonly Regex regA = new Regex("<a[\\s]+class=\"J-userPic([^<>]*?)[\\s]+href=\"([^\"]*?)\"");
        private static readonly Regex regImg = new Regex("<p class=\"tc mb10\"><img[\\s]+src=\"([^\"]*?)\"");

        public Form1()
        {
            InitializeComponent();
        }

        // Runs a UI update on the UI thread; ignored if the form has already been closed
        private void SafeInvoke(Action action)
        {
            try
            {
                if (!IsDisposed) Invoke(action);
            }
            catch (InvalidOperationException) { } // also covers ObjectDisposedException
        }

        // Start: 10 threads, each crawling 10 list pages (100 pages in total)
        private void button1_Click(object sender, EventArgs e)
        {
            DateTime dtStart = DateTime.Now;
            button3.Enabled = true;
            button2.Enabled = true;
            button1.Enabled = false;
            int page = 0;
            int count = 0;
            int personCount = 0;
            lblPage.Text = "Pages completed: 0";
            cts = new CancellationTokenSource();
            CancellationToken token = cts.Token;
            pauseEvent.Set();

            for (int i = 1; i <= 10; i++)
            {
                Thread thread = new Thread(obj =>
                {
                    for (int j = 1; j <= 10 && !token.IsCancellationRequested; j++)
                    {
                        try
                        {
                            pauseEvent.Wait(token); // blocks while paused
                            // Per-thread page number: thread 1 -> pages 1-10, thread 2 -> 11-20, ...
                            // (the original shared a single `index` variable across all threads)
                            int index = ((int)obj - 1) * 10 + j;
                            string pageHtml = HttpRequestUtil.GetPageHtml("http://tt.mop.com/c44/0/1_" + index + ".html");
                            foreach (Match match in regA.Matches(pageHtml))
                            {
                                string url = match.Groups[2].Value; // href value
                                if (!url.StartsWith("/", StringComparison.Ordinal)) continue; // site-relative links only
                                pauseEvent.Wait(token);
                                string imgPageHtml = HttpRequestUtil.GetPageHtml("http://tt.mop.com" + url);
                                int persons = Interlocked.Increment(ref personCount); // atomic across threads
                                SafeInvoke(() => lblPerson.Text = "Entries completed: " + persons);
                                foreach (Match matchImgPage in regImg.Matches(imgPageHtml))
                                {
                                    string imgUrl = matchImgPage.Groups[1].Value; // src value
                                    if (!imgUrl.StartsWith("http://i1", StringComparison.Ordinal)) continue;
                                    try
                                    {
                                        HttpRequestUtil.HttpDownloadFile(imgUrl);
                                        int downloaded = Interlocked.Increment(ref count);
                                        SafeInvoke(() =>
                                        {
                                            lblNum.Text = "Images downloaded: " + downloaded;
                                            double time = DateTime.Now.Subtract(dtStart).TotalSeconds;
                                            if (time > 0)
                                            {
                                                lblSpeed.Text = "Speed: " + (downloaded / time).ToString("0.0") + " images/s";
                                            }
                                        });
                                    }
                                    catch { } // skip images that fail to download
                                    Thread.Sleep(1);
                                }
                            }
                        }
                        catch (OperationCanceledException) { return; } // Stop was pressed
                        catch { } // skip the rest of this list page if a request fails
                        int done = Interlocked.Increment(ref page);
                        SafeInvoke(() => lblPage.Text = "Pages completed: " + done);
                        if (done == 100)
                        {
                            SafeInvoke(() =>
                            {
                                button1.Enabled = true;
                                MessageBox.Show("Done!");
                            });
                        }
                    }
                });
                thread.IsBackground = true; // don't keep the process alive after the form closes
                thread.Start(i);
            }
        }

        // Stop: signal all workers; each exits at its next checkpoint
        private void button2_Click(object sender, EventArgs e)
        {
            cts?.Cancel();
            button1.Enabled = true;
            button2.Enabled = false;
            button3.Enabled = false;
            button4.Enabled = false;
        }

        private void Form1_FormClosing(object sender, FormClosingEventArgs e)
        {
            cts?.Cancel();
        }

        // Pause: workers block at their next pauseEvent.Wait
        private void button3_Click(object sender, EventArgs e)
        {
            pauseEvent.Reset();
            button3.Enabled = false;
            button4.Enabled = true;
        }

        // Resume
        private void button4_Click(object sender, EventArgs e)
        {
            pauseEvent.Set();
            button3.Enabled = true;
            button4.Enabled = false;
        }
    }
}
```
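The crawl logic can also be separated from the UI and from the hand-made threads. The following is a hedged sketch, not the article's design: `Parallel.ForEach` with `MaxDegreeOfParallelism = 10` stands in for the ten manual threads, the article's two regular expressions and URL filters are applied through capture groups, and the page fetcher is injected as a `Func<string, string>` (for example `HttpRequestUtil.GetPageHtml`) so the logic can be exercised with canned HTML. `CrawlSketch` and its methods are hypothetical names, and downloading is left out: the method only collects image URLs.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: the article's crawl logic without hand-made threads or UI code
public static class CrawlSketch
{
    // The article's two patterns; group 2 = the link's href, group 1 = the image's src
    private static readonly Regex RegA = new Regex("<a[\\s]+class=\"J-userPic([^<>]*?)[\\s]+href=\"([^\"]*?)\"");
    private static readonly Regex RegImg = new Regex("<p class=\"tc mb10\"><img[\\s]+src=\"([^\"]*?)\"");

    // Site-relative post links found on one list page
    public static IEnumerable<string> DetailLinks(string listHtml) =>
        RegA.Matches(listHtml).Cast<Match>()
            .Select(m => m.Groups[2].Value)
            .Where(u => u.StartsWith("/", StringComparison.Ordinal));

    // Image URLs on one post page, restricted to the http://i1 host as in the article
    public static IEnumerable<string> ImageUrls(string postHtml) =>
        RegImg.Matches(postHtml).Cast<Match>()
            .Select(m => m.Groups[1].Value)
            .Where(u => u.StartsWith("http://i1", StringComparison.Ordinal));

    // Crawls list pages 1..pageCount with at most 10 concurrent workers.
    // Returns the number of list pages processed and every image URL found (in no particular order).
    public static (int Pages, List<string> Images) Crawl(
        Func<string, string> fetch, int pageCount, CancellationToken token)
    {
        var images = new ConcurrentBag<string>();
        int pagesDone = 0;
        var options = new ParallelOptions { MaxDegreeOfParallelism = 10, CancellationToken = token };

        Parallel.ForEach(Enumerable.Range(1, pageCount), options, index =>
        {
            string listHtml = fetch("http://tt.mop.com/c44/0/1_" + index + ".html");
            foreach (string link in DetailLinks(listHtml))
            {
                foreach (string img in ImageUrls(fetch("http://tt.mop.com" + link)))
                {
                    images.Add(img); // ConcurrentBag is safe to add to from any worker
                }
            }
            Interlocked.Increment(ref pagesDone); // atomic, unlike a plain page++
        });

        return (pagesDone, images.ToList());
    }
}
```

From the form it could be started with `Task.Run(() => CrawlSketch.Crawl(HttpRequestUtil.GetPageHtml, 100, cts.Token))`, with progress marshalled back through `Invoke`. A cancelled token makes `Parallel.ForEach` throw `OperationCanceledException`, and a failing fetch surfaces as an `AggregateException` instead of being silently swallowed.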

That is all for this article. We hope it helps with your learning.